Types of Image Annotation for Artificial Intelligence and Machine Learning

Computer vision relies on many types of image annotation, and each of these techniques suits different applications.

Curious about what you can achieve with these different annotation methods? Let's look at the annotation methods most commonly used in computer vision, along with notable use cases for each.

Types of Annotations

Before we delve into use cases for computer vision image annotation, we need to be familiar with the annotation approaches themselves. Let's review the most common image annotation techniques.

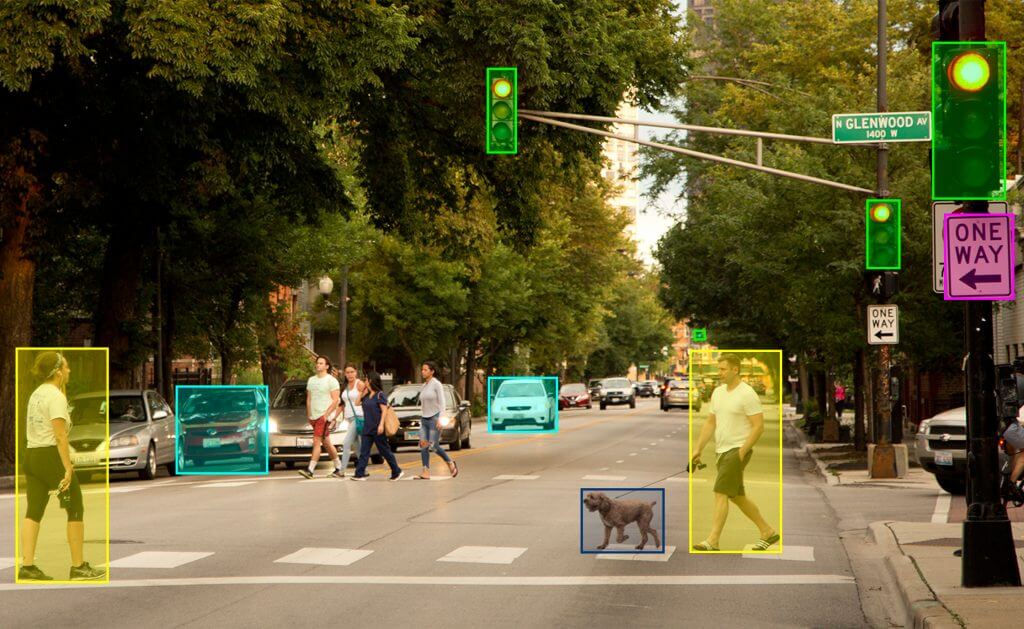

1. Bounding Boxes

Due in part to their flexibility and simplicity, bounding boxes are one of the most widely used forms of image annotation in computer vision. A bounding box encloses an object and helps the computer vision model localize objects of interest. Boxes are simple to construct: you only need to define the X and Y coordinates of the box's upper-left and lower-right corners.
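To make this concrete, here is a minimal Python sketch of a bounding box stored as its two corners. It assumes pixel coordinates with the origin at the image's top-left corner (x grows to the right, y grows downward); the `BoundingBox` class and its `to_xywh` helper are illustrative names, not the format of any particular annotation tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoundingBox:
    """An axis-aligned box defined by its upper-left and lower-right corners.

    Assumes image coordinates: the origin is the top-left pixel, x grows to
    the right, and y grows downward. This class is a hypothetical example.
    """

    label: str
    x_min: float  # upper-left corner, x
    y_min: float  # upper-left corner, y
    x_max: float  # lower-right corner, x
    y_max: float  # lower-right corner, y

    def __post_init__(self) -> None:
        # Reject boxes whose corners are swapped or that have zero size.
        if self.x_max <= self.x_min or self.y_max <= self.y_min:
            raise ValueError("lower-right corner must be below and right of upper-left")

    @property
    def width(self) -> float:
        return self.x_max - self.x_min

    @property
    def height(self) -> float:
        return self.y_max - self.y_min

    @property
    def area(self) -> float:
        return self.width * self.height

    def to_xywh(self) -> tuple:
        """Convert to (x, y, width, height), another common way to store boxes."""
        return (self.x_min, self.y_min, self.width, self.height)


# Hypothetical example box around a car.
car = BoundingBox("car", x_min=40, y_min=60, x_max=200, y_max=150)
print(car.width, car.height, car.area)  # 160 90 14400
print(car.to_xywh())                    # (40, 60, 160, 90)
```

Storing the two corners keeps validation simple; the (x, y, width, height) form carries the same information and is easy to derive when a tool expects it.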

2. Polygonal Segmentation

Polygonal segmentation is another form of image annotation, and the idea behind it is a natural extension of bounding boxes. Like a bounding box, a polygon tells a computer vision system where to look for an object, but because it uses a complex polygon rather than a simple box, it defines the object's position and boundaries far more precisely.

The benefit of polygonal segmentation over bounding boxes is that it excludes much of the noise: the background pixels around the object that a box would include and that can confuse the classifier.
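One way to quantify that noise is to compare a polygon's area with the area of the tightest box around it. The minimal sketch below assumes a simple (non-self-intersecting) polygon given as a list of (x, y) vertices, computes its area with the shoelace formula, and reports what share of the enclosing box is background. The function names and the triangle's coordinates are hypothetical.

```python
def polygon_area(points):
    """Area of a simple (non-self-intersecting) polygon via the shoelace formula."""
    n = len(points)
    twice_area = 0.0
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]  # wrap around to close the polygon
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0


def enclosing_box_area(points):
    """Area of the tightest axis-aligned bounding box around the points."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return (max(xs) - min(xs)) * (max(ys) - min(ys))


def background_fraction(points):
    """Share of the tightest bounding box that falls outside the polygon."""
    return 1.0 - polygon_area(points) / enclosing_box_area(points)


# A triangular road sign outline (hypothetical coordinates).
sign = [(0, 100), (50, 0), (100, 100)]
print(polygon_area(sign))         # 5000.0
print(enclosing_box_area(sign))   # 10000
print(background_fraction(sign))  # 0.5
```

For this triangle, half of the box is background that a polygon annotation leaves out.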

3. Line Annotation

Line annotation involves drawing lines and splines, mainly to delineate boundaries between one part of an image and another. It is used when the region to be annotated can be thought of as a boundary but is too small or thin for a bounding box or another form of annotation to make sense.

Lines and splines are simple to annotate and are widely used for tasks such as teaching warehouse robots to identify differences between sections of a conveyor belt or teaching autonomous vehicles to recognize lanes.
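A line annotation is commonly stored as an ordered list of points joined by straight segments. The minimal sketch below assumes that polyline representation (a spline tool would instead fit a smooth curve through the points) and computes the annotated line's length. The `LineAnnotation` name and the coordinates are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class LineAnnotation:
    """A labeled polyline (hypothetical format): consecutive points are joined by straight segments."""

    label: str
    points: list  # ordered (x, y) vertices

    def length(self) -> float:
        # Sum the Euclidean length of each segment between consecutive points.
        return sum(math.dist(a, b) for a, b in zip(self.points, self.points[1:]))


# Hypothetical lane marking made of two segments: lengths 5 and 6.
lane = LineAnnotation("lane_marking", [(0, 0), (3, 4), (3, 10)])
print(lane.length())  # 11.0
```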

4. Landmark Annotation

The fourth form of image annotation is landmark annotation, often called dot annotation because it involves placing dots, or points, across an image. In images containing many small objects, only a few dots may be needed to mark them, but several dots are often joined together to represent an object's outline or skeleton.

Dot size can vary, and larger dots are often used to distinguish significant (landmark) areas from their surroundings.
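As a sketch of how landmarks and their connections might be recorded, the example below stores each dot with a name, position, and radius, plus a list of named connections (a skeleton) that joins dots into an outline. The landmark names, coordinates, and default radius are all hypothetical; real tools define their own keypoint schemas.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Landmark:
    name: str
    x: float
    y: float
    radius: float = 2.0  # larger dots can flag especially significant points


# Hypothetical face landmarks; names and coordinates are illustrative.
landmarks = {
    "left_eye": Landmark("left_eye", 30, 40),
    "right_eye": Landmark("right_eye", 70, 40),
    "nose_tip": Landmark("nose_tip", 50, 60, radius=4.0),
    "mouth_left": Landmark("mouth_left", 35, 80),
    "mouth_right": Landmark("mouth_right", 65, 80),
}

# Skeleton: pairs of landmark names joined connect-the-dots style.
skeleton = [
    ("left_eye", "right_eye"),
    ("left_eye", "nose_tip"),
    ("right_eye", "nose_tip"),
    ("mouth_left", "mouth_right"),
]


def skeleton_segments(landmarks, skeleton):
    """Turn named connections into coordinate segments ready for drawing."""
    return [
        ((landmarks[a].x, landmarks[a].y), (landmarks[b].x, landmarks[b].y))
        for a, b in skeleton
    ]


for segment in skeleton_segments(landmarks, skeleton):
    print(segment)
```

Keeping connections by name rather than by coordinates means the skeleton definition can be reused across every annotated image.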

5. 3D Cuboids

Like bounding boxes, 3D cuboids are a powerful type of image annotation because they show a classifier where to search for objects. Unlike bounding boxes, however, 3D cuboids have depth in addition to height and width.

Usually, anchor points are placed at the corners of the object, and lines connect the anchors. This gives a 3D representation of the object, so the computer vision system can learn to discern features such as volume and position in 3D space.
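A common way to parameterize a cuboid is by its center, its dimensions, and a rotation about the vertical axis (yaw); the eight corner anchors and the twelve edges joining them follow from those values. The minimal sketch below assumes that parameterization and a z-up coordinate frame. Real datasets differ in axis and rotation conventions, and the function names here are hypothetical.

```python
import math

# Corner index i encodes which half of each axis a corner sits on:
# bit 2 = length axis, bit 1 = width axis, bit 0 = height axis.
# Two corners share an edge exactly when their indices differ in one bit.
CUBOID_EDGES = [(a, b) for a in range(8) for b in range(a + 1, 8) if (a ^ b) in (1, 2, 4)]


def cuboid_corners(cx, cy, cz, length, width, height, yaw):
    """Return the 8 corners of a 3D box centered at (cx, cy, cz).

    yaw is the rotation about the vertical z-axis, in radians.
    """
    cos_y, sin_y = math.cos(yaw), math.sin(yaw)
    corners = []
    for dx in (-length / 2, length / 2):
        for dy in (-width / 2, width / 2):
            for dz in (-height / 2, height / 2):
                # Rotate the horizontal offset by yaw, then shift to the center.
                x = cx + dx * cos_y - dy * sin_y
                y = cy + dx * sin_y + dy * cos_y
                corners.append((x, y, cz + dz))
    return corners


def cuboid_volume(length, width, height):
    return length * width * height


# A hypothetical car-sized box: 4 m long, 2 m wide, 1.5 m tall, resting on the ground.
car = cuboid_corners(0.0, 0.0, 0.75, 4.0, 2.0, 1.5, yaw=0.0)
print(len(car), len(CUBOID_EDGES))   # 8 12
print(car[0])                        # (-2.0, -1.0, 0.0)
print(cuboid_volume(4.0, 2.0, 1.5))  # 12.0
```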

6. Semantic Segmentation

Semantic segmentation is a type of image annotation that splits an image into regions by assigning every pixel in the image a label.

Regions of an image that carry different semantic meanings are treated separately from one another. For instance, "sky" could be one region of a picture and "grass" another. The key idea is that regions are defined by semantic information, and every pixel that makes up a region is given that region's label.
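In practice, a semantic segmentation annotation is often stored as a label mask: a grid with the same height and width as the image, holding one class ID per pixel. The tiny sketch below assumes that representation, with a hypothetical three-class label set, and counts how many pixels belong to each region.

```python
from collections import Counter

# Class IDs for a hypothetical label set.
CLASSES = {0: "sky", 1: "grass", 2: "tree"}

# A tiny 4x5 label mask: one class ID per pixel, matching the image's size.
mask = [
    [0, 0, 0, 0, 0],
    [0, 0, 2, 0, 0],
    [1, 1, 2, 1, 1],
    [1, 1, 1, 1, 1],
]


def pixels_per_class(mask):
    """Count the pixels assigned to each class, ordered by class ID."""
    counts = Counter(label for row in mask for label in row)
    return {CLASSES[label]: n for label, n in sorted(counts.items())}


print(pixels_per_class(mask))  # {'sky': 9, 'grass': 9, 'tree': 2}
```

Because every pixel carries exactly one label, the counts always add up to the image's total pixel count.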

Use Cases For Image Annotation Types

1. Bounding Boxes

In computer vision, bounding boxes help networks localize objects, so they benefit models that must both localize and identify items. Popular uses include any situation where objects are tested against each other for collisions.
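Because boxes are axis-aligned, testing two of them for a collision takes only a few comparisons. The minimal sketch below assumes boxes stored as (x_min, y_min, x_max, y_max) tuples and also computes intersection over union (IoU), a standard measure of how much two boxes overlap. The function and variable names are illustrative.

```python
def boxes_overlap(a, b):
    """True if two (x_min, y_min, x_max, y_max) boxes share a positive area."""
    return a[0] < b[2] and b[0] < a[2] and a[1] < b[3] and b[1] < a[3]


def iou(a, b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    inter_w = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


# Hypothetical boxes from a construction-site image.
forklift = (0, 0, 10, 10)
pallet = (5, 5, 15, 15)
worker = (20, 20, 30, 30)
print(boxes_overlap(forklift, pallet), round(iou(forklift, pallet), 3))  # True 0.143
print(boxes_overlap(forklift, worker), iou(forklift, worker))            # False 0.0
```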

Autonomous driving is an obvious application of bounding boxes and object detection, since these systems must be able to identify vehicles on the road. Bounding boxes can also help assess site safety, for example by marking objects on construction sites, and help robots identify objects in various environments.

Bounding box use cases include:

Using drone footage to monitor the progress of building projects, from laying the foundations to the point where the house is ready to move into.

Identifying food and other items in grocery stores to automate parts of the checkout process.

Detecting exterior damage to vehicles, allowing a thorough review when insurance claims are made.

2. Polygonal Segmentation

Polygonal segmentation annotates objects with complex polygons, which lets it capture irregularly shaped objects. It is preferred over bounding boxes when precision matters. Because polygons follow an object's outline, they minimize the background noise that a bounding box would include, noise that can reduce the model's accuracy.

Polygonal segmentation is beneficial in autonomous driving, where it can highlight irregularly shaped items such as logos and street signs and locate cars more precisely than bounding boxes can.

Polygonal segmentation is also useful for tasks where many irregularly shaped objects must be annotated correctly, such as object detection in images captured by satellites and drones. If the goal is to detect features such as bodies of water accurately, polygonal segmentation should be used instead of bounding boxes.

In computer vision, notable use cases for polygonal segmentation include:

Annotating the many irregularly shaped objects found in cityscapes, such as vehicles, trees, and pools.

Polygonal segmentation can also make objects easier to annotate. For example, Polygon-RNN, a polygon annotation tool, achieves substantial improvements in both speed and precision compared with the conventional method of annotating irregular shapes, semantic segmentation.

3. Line Annotation

Because line annotation highlights lines in an image, it works best when the important features are linear in appearance.

A common use case for line annotation is autonomous driving, where it delineates the lanes on a road. Similarly, line annotation can direct industrial robots to place items between two lines that designate a target region. Bounding boxes could be used for these purposes, but line annotation is a much cleaner option because it avoids much of the noise that bounding boxes introduce.
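To illustrate the target-region idea, the sketch below checks whether a point lies between two line annotations using the sign of a 2D cross product. It assumes each line is a single straight segment given as two (x, y) endpoints and that both lines are drawn in the same direction. It also treats them as infinite lines, so it does not check whether the point falls past the segments' ends. The function names and coordinates are hypothetical.

```python
def side(a, b, p):
    """2D cross product of (b - a) and (p - a).

    The sign tells which side of the directed line a -> b the point p lies
    on; zero means p is exactly on the line.
    """
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def between_lines(line1, line2, p):
    """True if p lies strictly between two lines drawn in the same direction."""
    return side(*line1, p) * side(*line2, p) < 0


# Two hypothetical boundary lines on a conveyor, both drawn left to right.
lower = ((0, 0), (100, 0))
upper = ((0, 10), (100, 10))
print(between_lines(lower, upper, (50, 5)))   # True
print(between_lines(lower, upper, (50, 15)))  # False
```

If the two lines were drawn in opposite directions, the signs would flip, so a real tool would first normalize line orientation.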

Other notable computer vision uses of line annotation include automatically identifying crop rows and even monitoring the positions of insect legs.

4. Landmark Annotation

Because landmark (dot) annotation represents items with small dots, one of its key uses is detecting and counting small objects. For example, aerial views of cities may call for landmark detection to find objects of interest such as vehicles, buildings, trees, or ponds.
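Counting is straightforward once each small object is a single labeled dot. In this sketch, the (label, x, y) tuple format and the coordinates are hypothetical; it tallies dots per class across the whole image and within a rectangular region.

```python
from collections import Counter

# Hypothetical dot annotations on an aerial image: (label, x, y) in pixels.
dots = [
    ("tree", 12, 40), ("tree", 18, 44), ("vehicle", 200, 310),
    ("tree", 25, 51), ("pond", 400, 120), ("vehicle", 215, 305),
]


def count_by_label(dots):
    """Number of dots per label, most common first."""
    return Counter(label for label, _, _ in dots).most_common()


def count_in_region(dots, label, region):
    """Count dots with the given label inside an (x_min, y_min, x_max, y_max) region."""
    x_min, y_min, x_max, y_max = region
    return sum(
        1 for lbl, x, y in dots
        if lbl == label and x_min <= x <= x_max and y_min <= y <= y_max
    )


print(count_by_label(dots))                           # [('tree', 3), ('vehicle', 2), ('pond', 1)]
print(count_in_region(dots, "tree", (0, 0, 20, 50)))  # 2
```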

Landmark annotation has other applications too. Joining several landmarks, like a connect-the-dots puzzle, produces outlines of objects, and these dot outlines can be used to identify facial features or to analyze people's motion and posture.

Some computer vision use cases for landmark annotation are:

Face recognition, since tracking several landmarks makes it easier to identify emotions and other facial features.

Geometric morphometrics in biology, which analyzes the shapes of organisms using landmark points.

5. 3D Cuboids

3D cuboids are used when a computer vision system must not only identify an object but also predict its general shape and volume. They are most often used when the computer vision system is built for an autonomous system capable of locomotion, since such systems need to make assumptions about the objects in the world around them.

Use cases for 3D cuboids in computer vision include developing vision systems for autonomous vehicles and mobile robots.

6. Semantic Segmentation

A potentially unintuitive fact about semantic segmentation is that it is essentially a form of classification, except that instead of classifying a whole object, it classifies every pixel in the area of interest. Seen this way, semantic segmentation suits any task that requires classifying or recognizing large, distinct regions.
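The per-pixel view can be sketched directly: given a score for every class at every pixel, each pixel simply takes the class with the highest score. The scores below are hypothetical stand-ins for a model's output, and the `segment` function is an illustrative name, not a particular library's API.

```python
def segment(scores, class_names):
    """Label each pixel with its highest-scoring class.

    scores[y][x] is a list of per-class scores for the pixel at (x, y),
    in the same order as class_names.
    """
    return [
        [class_names[max(range(len(pixel)), key=pixel.__getitem__)] for pixel in row]
        for row in scores
    ]


classes = ["road", "grass", "sidewalk"]
# Hypothetical scores for a 2x2 image, e.g. as produced by a segmentation model.
scores = [
    [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]],
    [[0.2, 0.1, 0.7], [0.6, 0.3, 0.1]],
]
print(segment(scores, classes))  # [['road', 'grass'], ['sidewalk', 'road']]
```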

One application of semantic segmentation is autonomous driving, where the car's AI must distinguish sections of road from sections of grass or sidewalk.

Beyond autonomous driving, computer vision use cases for semantic segmentation include:

Analyzing crop fields to detect weeds and particular types of crops.

Medical image recognition for diagnosis, identification of cells, and measurement of blood flow.

Monitoring forests and jungles for deforestation and biodiversity disruption to strengthen conservation efforts.

Conclusion

Almost anything you want to do with computer vision can be accomplished; it is a matter of choosing the right resources for the job. Now that you are more familiar with the different forms of image annotation and their potential use cases, the best next step is to experiment and see which annotation strategies work best for your application.

You can also book a free consultation with TagX to discuss the right annotation approach for your project.